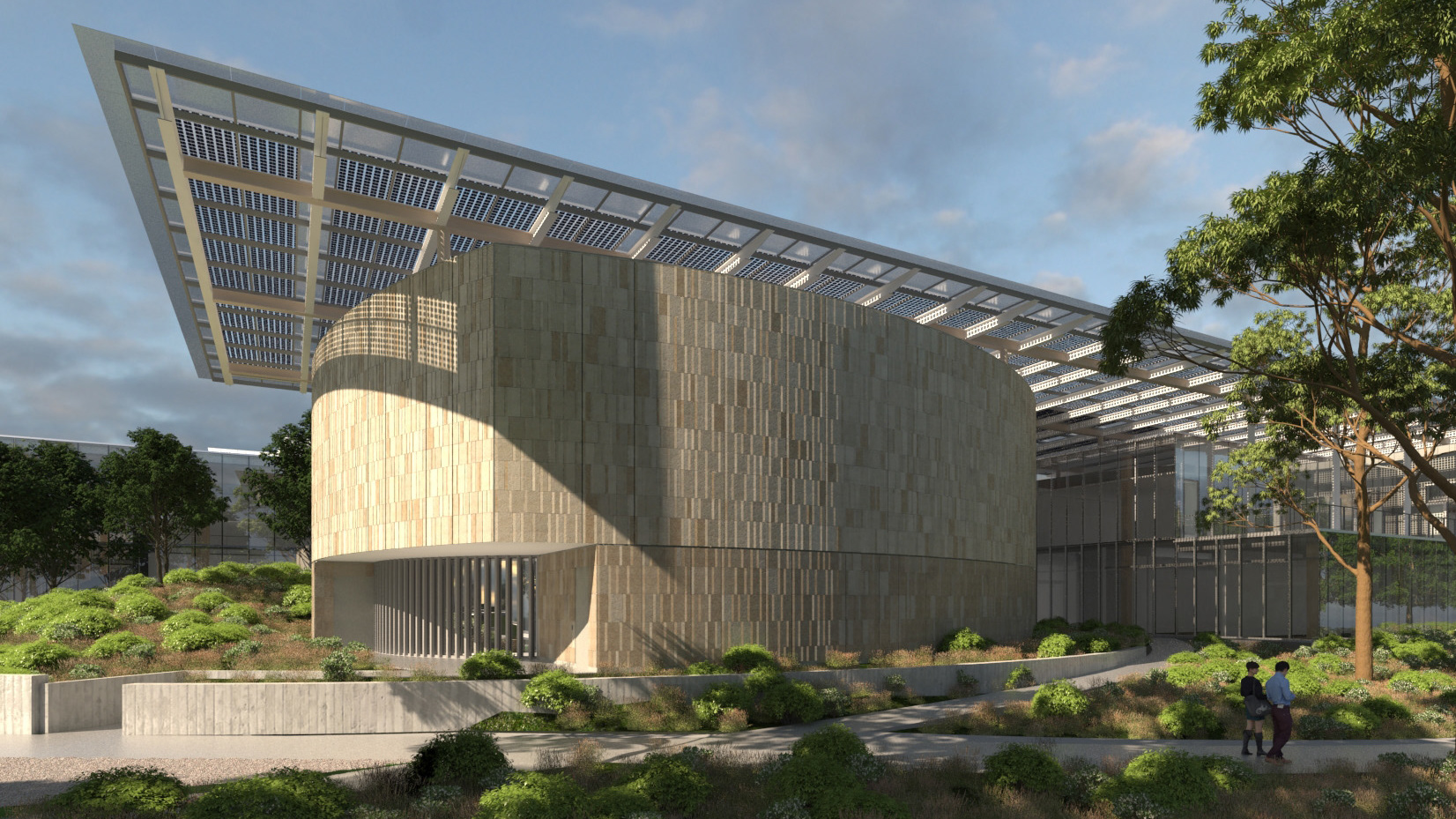

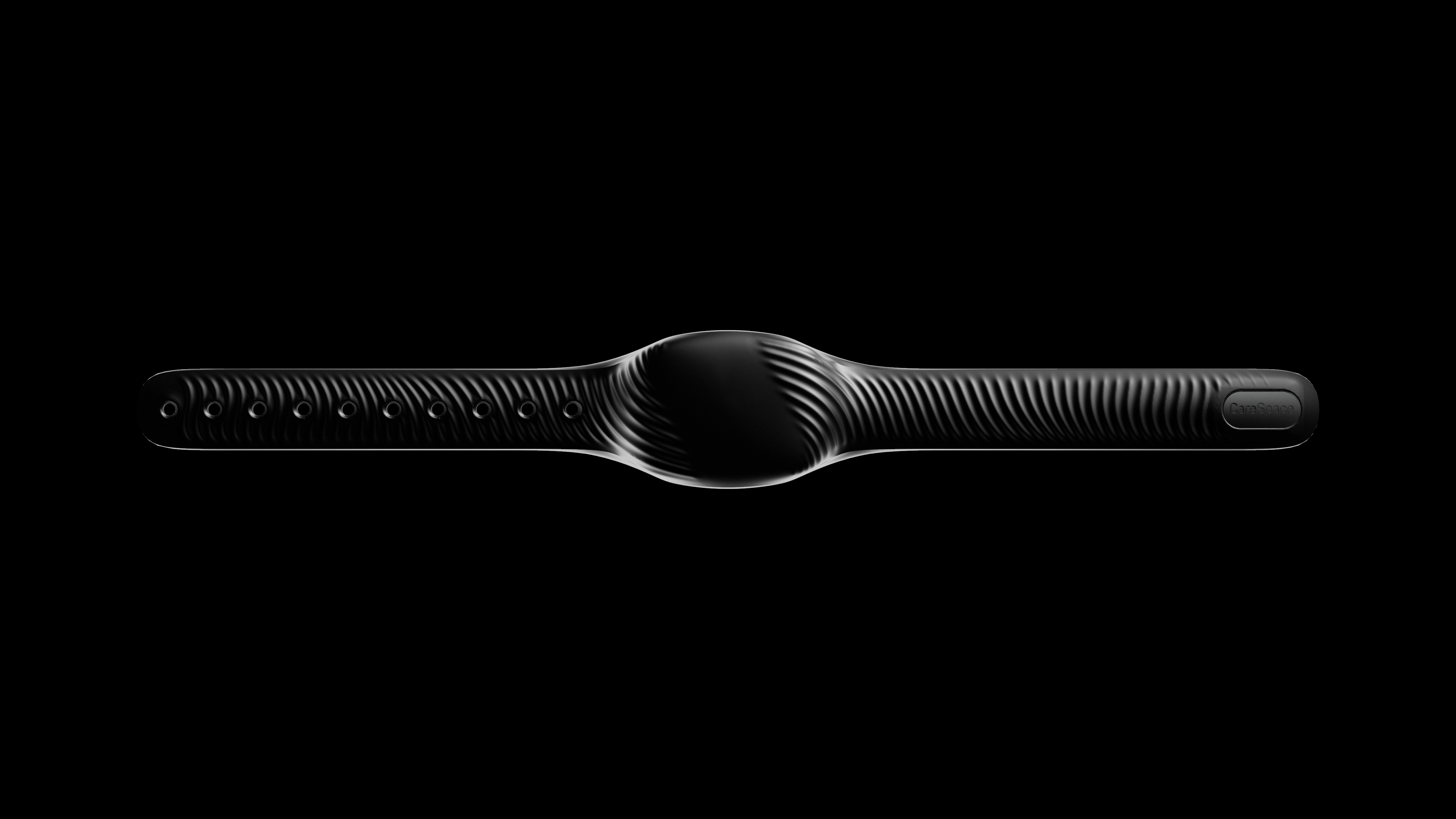

Design and development of Mindesk, the first VR/AR interface to be natively integrated within CAD software. Specifically, it is a plug-in for Rhino and Solidworks that lets a user experience, model, and edit a project physically within a digital space. Mindesk fully immerses the user in the virtual environment with six degrees of freedom (6DoF). There is no need to export, download, or import 3D models: a continuous feedback loop lets users move seamlessly between the desktop and AR/VR. Collaboration happens in real time across both interfaces. The goal was to build an uninterrupted virtual environment that harnesses natural hand movement and spatial perception to give users a strong sense of control and feel. Mindesk can also work with live parametric Grasshopper definitions.

Before joining the team, I was an early adopter of the pilot software at an architecture firm. I was so fascinated by the idea of 3D modeling with my hands that I had to join the team. While helping develop the UX/UI, I found that implementing user feedback was one of the most effective ways to work through new interfaces and bugs. As we rolled out software updates and adjusted accordingly, we also learned a great deal from teaching the software in person and through online tutorials. The VR space can be disorienting, so making natural, intuitive motions the drivers of the modeling tools was essential.

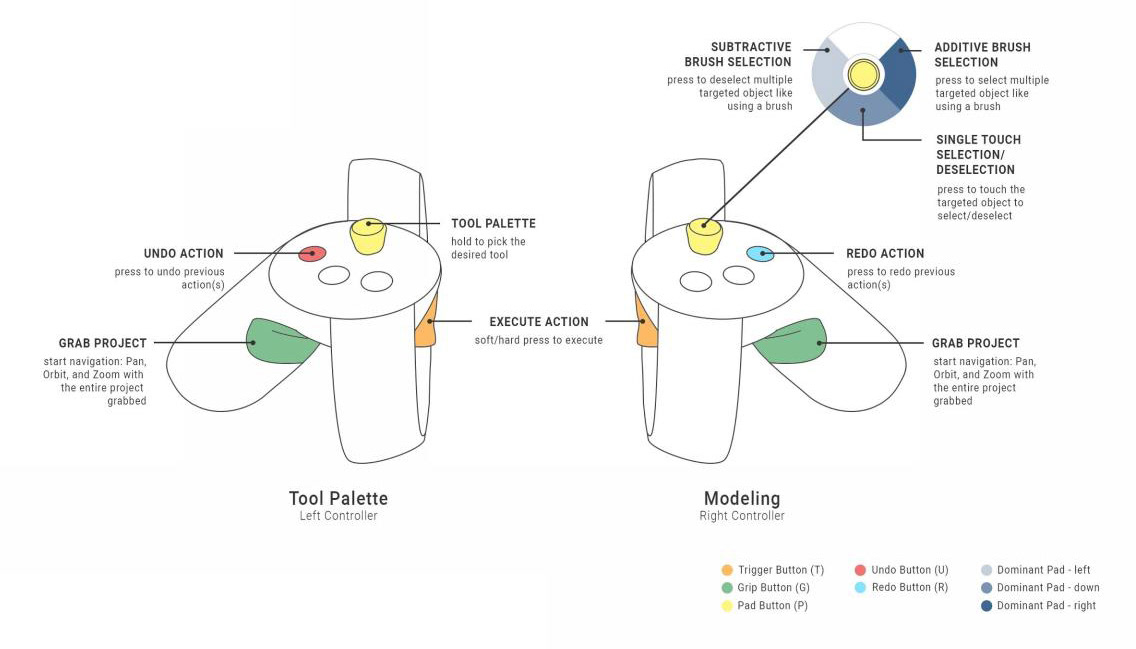

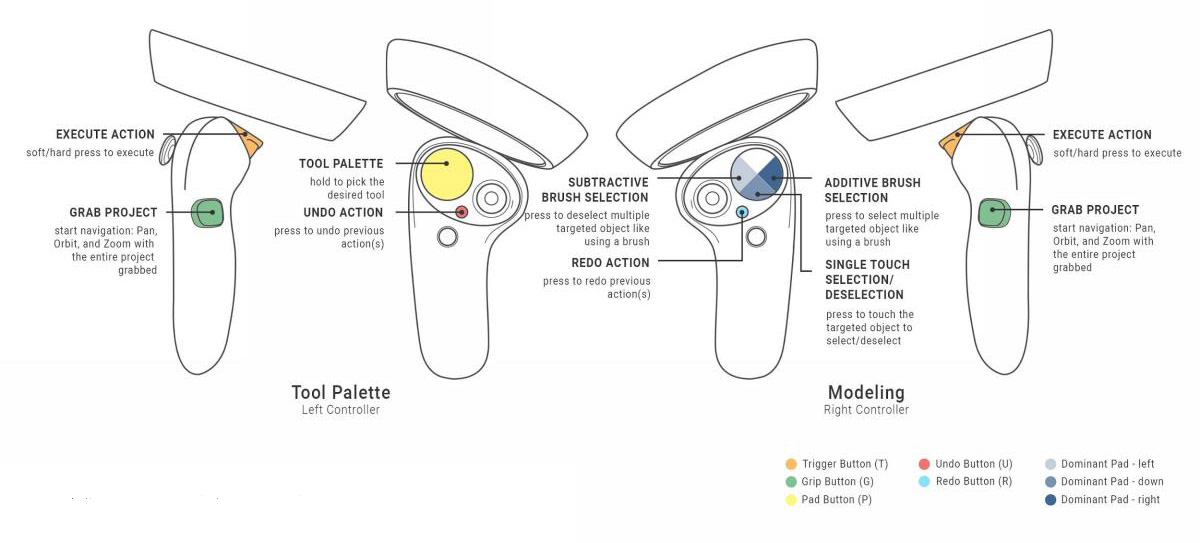

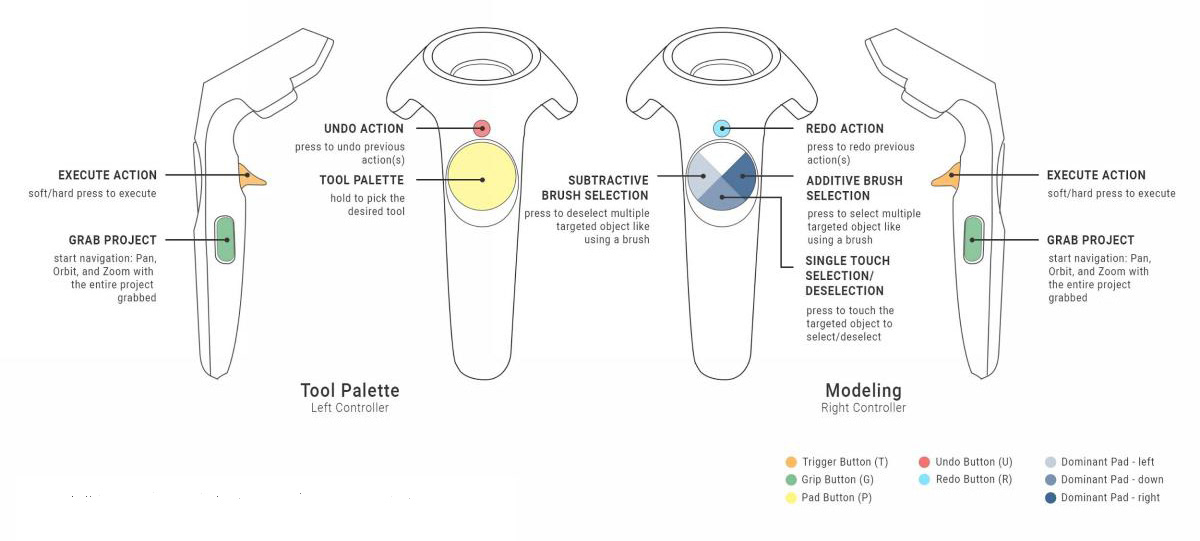

In early development, navigation adjustments proved crucial to the speed and ease of using the tool. Within the headset, a user can rotate a model, fly around it, and quickly and accurately adjust its scale. The key was to bring the existing Rhino workflow into VR, including general navigation, multi-scale views, geometric NURBS editing, surface and curve modeling, and more. Mindesk works on HTC Vive, Oculus Rift/Quest, Windows Mixed Reality, and Meta 2.0 devices. Control mappings differ from device to device and keep changing as the hardware evolves. The next step, therefore, was to begin eliminating physical controllers in favor of deliberate hand gestures that could be adopted across all platforms and devices.